Human + AI

Goodbye guesswork.

Hello skill-based decisions.

Predictive, fair, and science-validated assessments — powered by AI.

Over 3,500 companies place their trust in us

Simple recruitment

3 simple steps to successful recruitment

01

Assess more intelligently

Measure personality, motivation and soft skills with reliable, scientifically validated assessments.

02

Predict what works

Use AI to identify your company's specific performance drivers.

03

Act with impact

Recruit better, retain more and manage careers with confidence.

ROI

Predict talent. Boost performance, measure ROI.

45%

Reduction in recruitment time

50%

Reduction in turnover

78%

Fewer recruitment errors

40%

More team productivity

Predict success, maximize ROI. Build winning teams.

AssessFirst transforms your intuition into concrete results. Our AI helps you make better HR decisions by analyzing each candidate or employee in depth, so you can recruit faster, reduce costs and achieve your goals sooner.

Predict talent success.

Recruit, retain, develop.

Predictive recruitment for business results

Use AI-based predictive models to assess potential success even before the first interview.

-50%

Lower employee turnover

2.5x

Higher engagement levels

+30%

Stronger team performance

55%

Time saved on every hire

Manager & team affinity analysis to reduce turnover

Go beyond the CV. Measure compatibility with teams and managers to improve loyalty.

Customized development plans to maximize the value of your talent

- Accelerate skills development

- Anticipate tomorrow's needs

- Boost engagement and retention

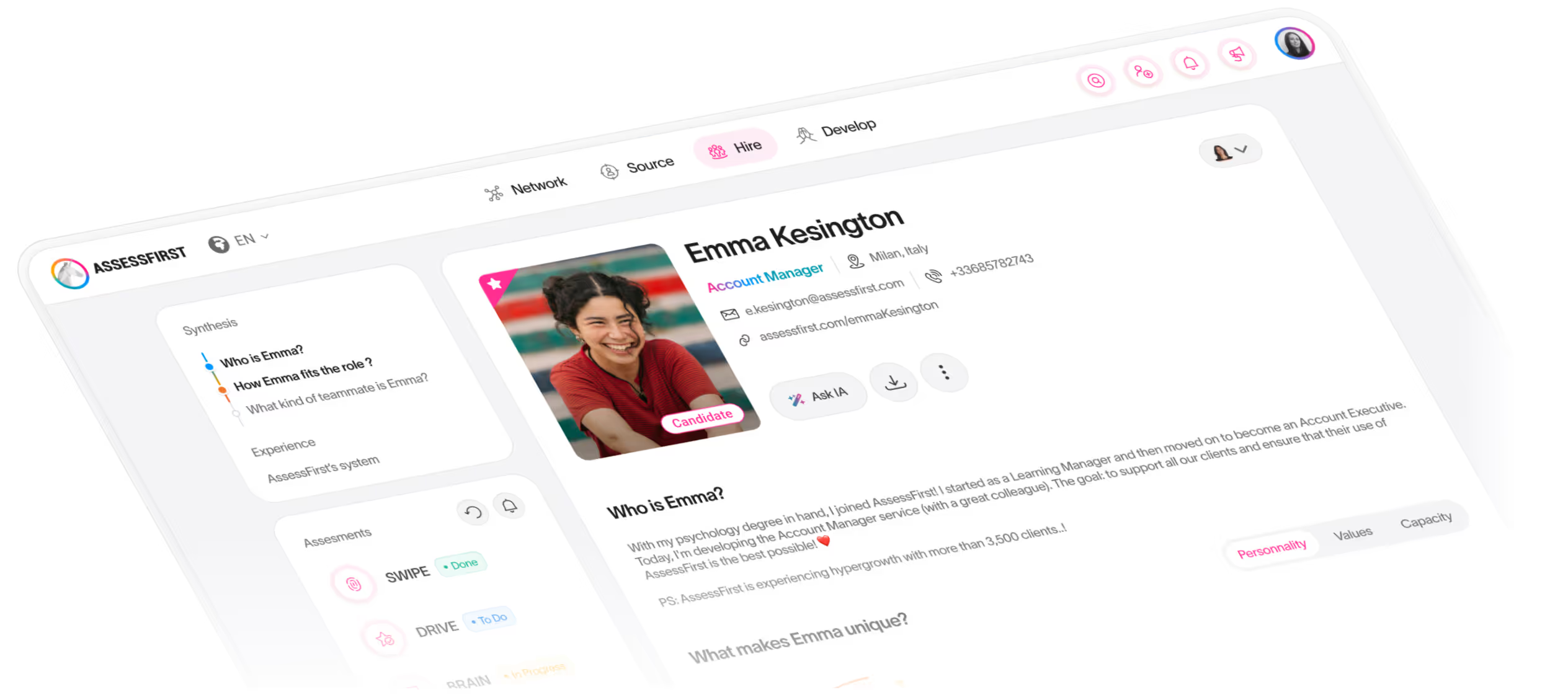

Platform

A complete platform to bring out your talents' full potential

Soft Skills & Potential. Assess personality, motivation and reasoning with Swipe, Drive and Brain.

Technical & linguistic skills. Measure real skills with VOICE, our adaptive assessment engine.

"AssessFirst has transformed our recruitment by enabling us to quickly identify talent that fits our company culture. An indispensable tool."

Vicky Docker

Head of Talent @ BNP PARIBAS

Predictive models. Predict performance, engagement and manager affinity with our exclusive AI.

See the platform in action.

Integrates easily with your favorite HR tools. AssessFirst connects to your ATS and HRIS, such as Greenhouse, SmartRecruiters and Workday.

We’ve built native, plug-and-play integrations with over 35 of the world’s most popular HR tools. Need something custom? Our Open API allows you to connect AssessFirst to any system you use, quickly and securely.

Security First

Enterprise-level security.

Peace of mind guaranteed.

Your data. Your rules.

Global compliance.

We adhere to the most stringent compliance standards to ensure the security and confidentiality of your data.

FAQ

Answers to your frequently asked questions

Are assessments objective and fair?

Yes. Our tests are designed to limit bias, guarantee equal opportunities and support consistent decisions.

Is AssessFirst suitable for all types of recruitment?

Yes, from high-volume hiring to rare profiles, from trainees to top management, in France or abroad.

What kind of insights does AssessFirst provide on candidates?

Personality, motivations, reasoning, cultural compatibility, performance prediction, development recommendations...

How does AssessFirst improve my recruitment process?

It enables you to make faster, more reliable decisions. Result: 50% fewer recruitment errors, 45% less recruitment time, and better retention.

What is AssessFirst and how does it work?

AssessFirst is a predictive intelligence platform that combines behavioral science and artificial intelligence to assess and predict talent success, engagement and compatibility.